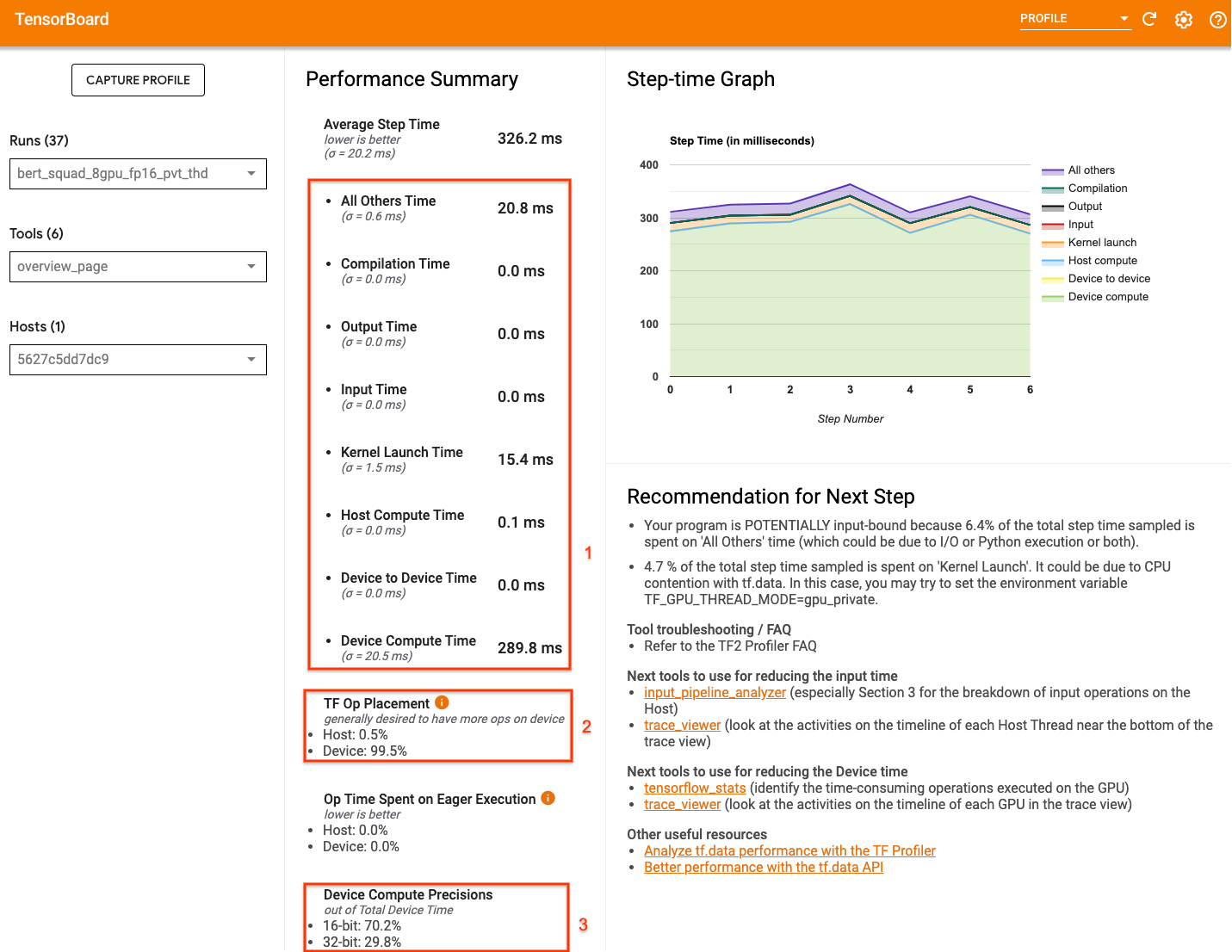

Tracking system resource (GPU, CPU, etc.) utilization during training with the Weights & Biases Dashboard

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

How to Track Your GPU Usage During Machine Learning - Data Science of the Day - NVIDIA Developer Forums

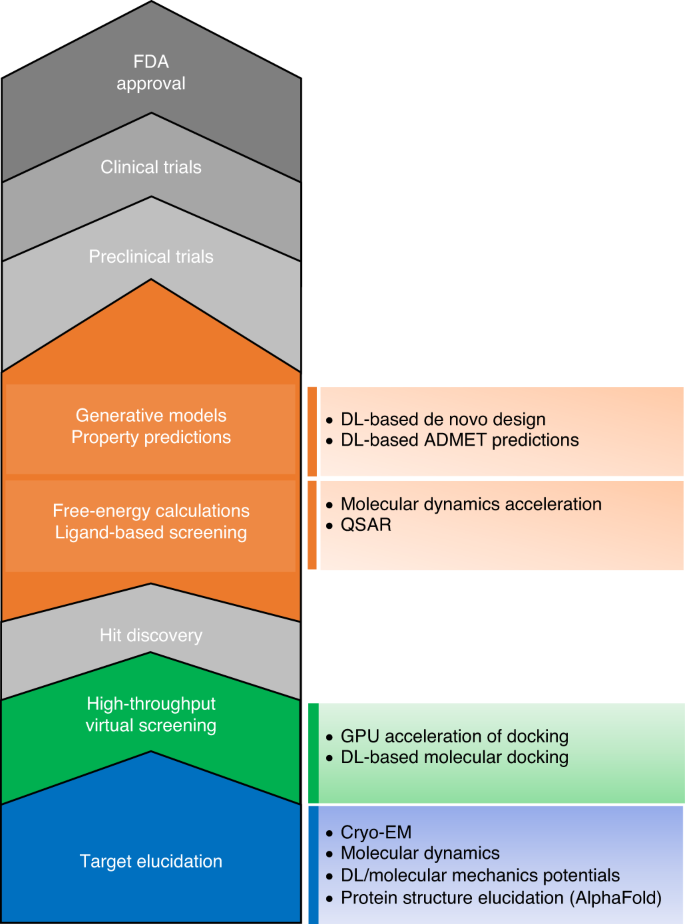

The transformational role of GPU computing and deep learning in drug discovery | Nature Machine Intelligence

![What is a GPU vs a CPU? [And why GPUs are used for Machine Learning] - YouTube](https://i.ytimg.com/vi/XKOI9-G-wk8/maxresdefault.jpg)